Step 2 - Existential Risks

While searching for definitions of the term “existential risk”, I found an interesting paper (https://www.fhi.ox.ac.uk/Existential-risk-and-existential-hope.pdf) that compares and evaluates different definitions. If you’re interested in the details, go ahead and have a look!

Personally, I find the following definition the most appropriate.

Existential Risk: An existential risk is one that threatens the premature extinction of Earth-originating intelligent life or the permanent and drastic destruction of its potential for desirable future development.

I also noticed during my research that people often use the terms “global catastrophic risk” or “existential threat”. These terms can of course be defined more precisely, but in this project we treat them as synonyms.

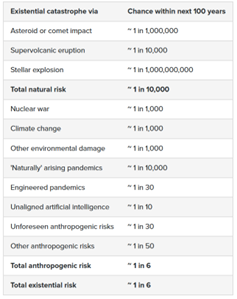

Existential Risks - Examples

Source: https://futureoflife.org/existential-risk/existential-risk/

Nuclear war was the first man-made global catastrophic risk, as a global war could kill a large percentage of the human population. As more research into nuclear threats was conducted, scientists realized that the resulting nuclear winter could be even deadlier than the war itself, potentially killing most people on Earth.

Biotechnology and genetics often inspire as much fear as excitement, as people worry about the potential negative effects of cloning, gene splicing, gene drives, and a host of other genetics-related advancements. While biotechnology provides incredible opportunities to save and improve lives, it also increases existential risks associated with manufactured pandemics and loss of genetic diversity.

Artificial intelligence (AI) has long been associated with science fiction, but it’s a field that’s made significant strides in recent years. As with biotechnology, there is great opportunity to improve lives with AI, but if the technology is not developed safely, there is also the chance that someone could accidentally or intentionally unleash an AI system that ultimately causes the elimination of humanity.

If you want to learn more about the existential risk posed by AI, have a look at a fellow student’s webpage: LINK

Climate change is a growing concern that people and governments around the world are trying to address. As the global average temperature rises, droughts, floods, extreme storms, and more could become the norm. The resulting food, water and housing shortages could trigger economic instabilities and war. While climate change itself is unlikely to be an existential risk, the havoc it wreaks could increase the likelihood of nuclear war, pandemics or other catastrophes.

Again, if you want to learn more about the existential risk of climate change, I recommend looking at a fellow student’s website: LINK

Other Existential Risks

- Other emerging technologies: forms of geo-engineering and atomic manufacturing

- Naturally occurring existential threats (asteroids, …)

- Engineered pandemics

- Wars
